While much of the world was focusing on cryptocurrencies and NFTs as the advent of “web3” thanks to ridiculous profits covered by the media, a quieter industrial revolution has been happening that truly has the power to revolutionise the way our world works.

Unless you were involved in the tech space, it would have been easy to brush aside artificial intelligence as still a pipe dream out of a Philip K. Dick novel. Sure, there were advancements, but we were still a long way off anything useful.

That is until November 2022, when the big brains at OpenAI unleashed ChatGPT onto the world. This wasn’t the first time OpenAI had made headlines. In 2019-2020, GPT-3’s public launch was scrutinised for its potential to be abused by bad actors in the creation of fake news, and OpenAI’s DALL-E has been making waves in the world of digital art. Aside from OpenAI, Disney’s latest series of “The Mandalorian” has shown us that actors can live in their young forms forever, thanks to deepfake technology. But none of these products seemed to penetrate the news cycle quite like ChatGPT did. With a chat-based interface, interaction was wonderfully simple, and it could seemingly do everything: make recipes, write code, develop your social media content (no, I didn’t use it for this). Startup message boards were flooded with people discussing how to start businesses using the technology, while just as many were claiming it was the beginning of the end for a human-based labour force. But what is ChatGPT really capable of? Does it spark the redundancy of copywriters the world over? Are programmers everywhere going to be out of a job in six months? What does it mean for companies like Intuety, who are making their own AI products?

Disclaimer: I am making a number of simplifications about transformers and conversational chatbots to illustrate, briefly, how they work, how they “think” and where their flaws lie. I am aware that transformers and language models are hugely complex, multifaceted data models.

What is ChatGPT really?

I do not speak French. I did French up to GCSE and it was my lowest grade. But I do know enough French words to throw them into some semblance of an order. To a fellow non-French speaker, it sounds like French. To a French speaker, it’s gobbledygook. Given enough sentences in French, I might be able to make sentences that look even more like French, since I’ve seen more examples. Given millions or billions of examples of French sentences, the likelihood that I make a sentence that is genuinely correct increases. I can know that “Je” is often followed by “suis”, while not knowing what either word means. Given a trillion French sentences and far more processing power than my brain can manage, I may even be able to trick a few French speakers into thinking I can actually speak French. An infinite number of monkeys and an infinite number of typewriters…
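To make the “Je” followed by “suis” idea concrete, here is a minimal sketch of a word-pair counter. It is a deliberately crude stand-in for a real language model (ChatGPT uses transformers, not simple pair counts), and the five-sentence corpus and the function names are made up purely for illustration.

```python
from collections import Counter, defaultdict

# Tiny, hypothetical corpus. A real model sees billions of sentences.
CORPUS = [
    "je suis fatigué",
    "je suis content",
    "je suis en retard",
    "je mange une pomme",
    "tu es content",
]


def train_bigrams(sentences):
    """Count how often each word is directly followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(most_likely_next(model, "je"))       # suis
print(most_likely_next(model, "bonjour"))  # None
```

The counter “knows” that “suis” tends to follow “je” without any notion of what either word means, which is exactly the point of the analogy.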

ChatGPT is a chatbot: a conversational interface to a computer-based system. These are nothing new. In 1950 Alan Turing proposed what is now known as the “Turing Test” for machine intelligence: given conversations with two possible “machines”, can a user tell which is the human and which is the machine? Is the computer sufficiently “human” to fool us? As early as 1966, programs such as ELIZA were attempting to tackle this challenge. You have likely used a chatbot yourself as the first point of contact with a company’s customer support. It is only more recently, however, that we have had the processing power, distributed processing technologies and vast volumes of training data required to make these chatbots appear truly smart. By using a set of technologies called “Transformers”, and training them on the vast array of content on the internet, we are able to teach computers how we speak. This is ChatGPT’s aim in its most boiled-down form: to give an answer to a question that appears human.

What isn’t it?

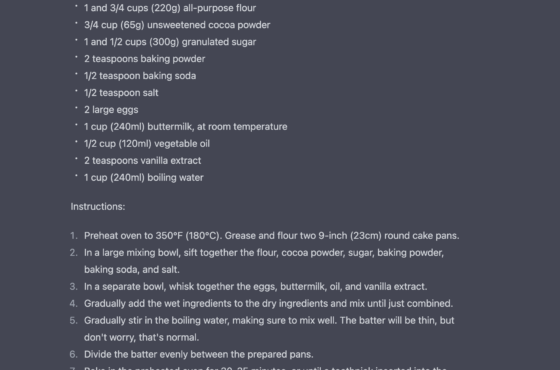

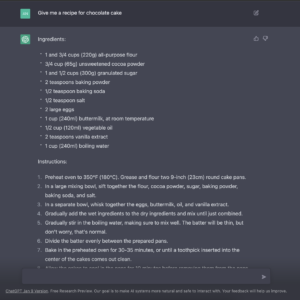

ChatGPT is like a politician. It says what it thinks you want to hear, but doesn’t actually know anything. It is a language model, not a knowledge model. Given the request to write a recipe for chocolate cake, its answer will be entirely based on what it thinks you want to hear. The words “recipe”, “chocolate” and “cake” will be closely associated with “flour”, “sugar” and “mixing”, and thanks to the vast array of recipes on the internet, it is able to order those words in the most common way. But ChatGPT does not know what a recipe is. It has no idea that sugar is sweet, or that baking powder is a raising agent. You can ask it “what is flour?” and it will give you an answer, but to an AI chatbot, that answer is just words, strung together in the right order.

What happens when it’s wrong?

Again, much like a politician, when it’s wrong, it’s “confidently wrong”, i.e. it will present incorrect information with the same level of confidence as correct information. There is nothing to differentiate the two. It’s all just words. If your generated recipe calls for 15 eggs and 200g of flour, it doesn’t know that those ratios are wildly incorrect. Again, it models language, not knowledge. As more and more people use ChatGPT, new ways to trick it and areas where it falls short are coming into view. However, the more people use it, the more data it has to train on (that’s why it’s currently free).

What does it mean for AI businesses?

We at Intuety have been building an AI-powered solution for Risk and Safety management for over three years. We use the same “transformer” technology in our semantically aware recommendation engine as OpenAI (albeit a different model). So when we started exploring what ChatGPT can do, and seeing its abilities, the knee-jerk reaction was… oh ****!

But as we started to scrutinise its output, more and more flaws began to show up. As we tried delving into increasingly niche topics, the same generic information was regurgitated again and again: the exact same words, in the exact same order. As we asked more complicated questions, more of its answers were simply wrong. Remember our earlier distinction between a language model and a knowledge model. Intuety is a knowledge system consisting of tens of thousands of datapoints, and hundreds of thousands of relationships and associations. It doesn’t just know that the words “hard” and “hat” go together. It knows that a hard hat is a piece of PPE and that it protects the wearer from falling objects. And, much like a human, as our knowledge system ingests more data, its knowledge will grow.
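As a rough illustration of the difference, a knowledge model can be thought of as a set of explicit facts and relationships that can be queried, rather than words that tend to appear together. The sketch below is not how Intuety’s system is built; the triples, relation names and helper functions are all hypothetical and chosen only to mirror the hard hat example.

```python
# Hypothetical facts stored as (subject, relation, object) triples.
FACTS = {
    ("hard hat", "is_a", "PPE"),
    ("hard hat", "protects_from", "falling objects"),
    ("scaffolding", "has_risk", "falling objects"),
}


def objects_of(facts, subject, relation):
    """Return everything linked to `subject` by `relation`."""
    return sorted(o for s, r, o in facts if s == subject and r == relation)


def mitigations_for(facts, activity):
    """Find items that protect from the risks attached to an activity."""
    risks = {o for s, r, o in facts if s == activity and r == "has_risk"}
    return sorted(s for s, r, o in facts if r == "protects_from" and o in risks)


print(objects_of(FACTS, "hard hat", "is_a"))  # ['PPE']
print(mitigations_for(FACTS, "scaffolding"))  # ['hard hat']
```

Because the relationships are explicit, the second query follows a chain (scaffolding has a risk, and a hard hat protects from that risk) rather than relying on which words usually sit near each other. Adding more triples grows what the system can answer.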

That’s not to say that chatbots and language models are useless. They can form the basis of a new generation of human-computer interaction, as we have been seeing in products such as Amazon’s Alexa, Apple’s Siri or Google Assistant. Not just for input, but for output too. “Alexa, what are the risks associated with scaffolding?” and “What are the mitigations for slips, trips and falls?” are a more natural form of interaction than a mouse and drop-down boxes. Where Intuety provides the knowledge, language models can provide the “fluff”, the boilerplate communication around our data. This is already demonstrated on our platform, where we automatically create summarisations of safety briefings and guidance documents.

Should I start a business that uses ChatGPT?

Startup message boards and LinkedIn have been awash with articles about starting a business that uses ChatGPT, and how to get rich off ChatGPT. Products like ChatGPT or GPT-3 do indeed lower the barrier to entry into the market. However, those plans have been laid to waste more recently as ChatGPT has reached capacity, with queues, slow response times and full-on outages setting Twitter ablaze with complaints. There is folly in starting a business off the back of another company’s technology or API (been there, done that, got the failed startup t-shirt). OpenAI has been transparent that ChatGPT in its current guise is not sustainable, and the bottom of the page itself clearly states “Free Research Preview”. That ChatGPT and other consumer-level AI products are entering the market is hugely exciting. But this technology will not come cheap, even if it is right now. At Intuety, we absolutely see the value in ChatGPT or something like it, but without the unique offering of our knowledge base, built over three years of development and data ingestion, we’d be riding on the coat-tails of someone else’s product, and at any time those coat-tails could be cut. We’re still fully assessing what AI products like ChatGPT offer us, but we already foresee numerous ways to use chatbots, language models and more generally trained models to augment and expand our product offering.

So ChatGPT is not the death knell of our business. While it does present us with new challenges, it also presents us with new opportunities. New ways to allow users to interact with our unique selling points that are not replicated by a chatbot.

Effects on the digital economy

The internet has, for a long time, run on an advertisement model. I’ve always said, “if you’re not paying for a product, you are the product”. Meta (formerly Facebook) has made billions of dollars selling data and feeding targeted adverts, but right down to the smallest scale, people have been writing coding tutorials and travel blogs, and making YouTube videos and TikToks, for a small piece of that advertising pie.

But what happens when Microsoft decides it’s no longer going to forward you to that food blog, and will just use AI to generate the recipe for you? What happens when, instead of directing a junior coder to your tutorial page, it inserts your code directly into the text editor? Ad revenues will go down, and many people’s businesses, side businesses or otherwise, may pay the price.

This is AI potentially cutting itself off at the kneecaps. When it is no longer viable to run your website, because the biggest players in the search world are no longer directing people to websites but giving AI-generated answers instead, the content may no longer be made. The very content the AI tools are trained on.

As previously stated, it also lowers the barrier to entry, not just into AI businesses but into other fields too. ChatGPT can create a YouTube script in seconds. It can give the framework of a blog post (and starting is always the hardest part). DALL-E could generate artwork for a game project, and art competitions are having to filter out AI-generated entries. While AI is still a long way from replacing people outright, its ripples will be felt across the digital economy. I have seen more than one copywriter say that clients have asked them to no longer write content, but to edit ChatGPT’s output instead, for half the cost.

That is to say nothing of the morality and legality of utilising the wider internet as a training set for AI models. If OpenAI starts making money from its chocolate cake recipes, which were learned from all the other recipes on the internet, should the authors of those recipes be compensated? The scrapers and bots used to extract the text data do not trigger ads, so the publisher does not even get the pennies of ad revenue a site visit might bring. You can bet that if I trained a language model on the Harry Potter collection, asked it to generate a story about a boy wizard who fights a dark lord and published it, Rowling’s lawyers would be on me like ants on sugar. There are indeed already lawsuits against Microsoft over GitHub Copilot which, while claimed to be trained only on public code repositories, has been shown to reproduce people’s private code, complete with comments. GitHub has also quietly changed its licensing to allow it to train on hosted code. There is also the question of the licensing of open source code. For example, does the Creative Commons NonCommercial licence, which restricts the commercial use of publicly available code (and is also prevalent in digital art and the 3D printing community), extend to training a commercial product on it, and is that licence superseded by GitHub’s usage licence? If you’re a lawyer in the space of digital copyright, you are potentially in for an interesting and profitable few years.

In Conclusion

AI, and more specifically consumer-level AI services, have the potential to trigger yet another industrial revolution (is that the third? or fourth now?). Its ripples will be felt across the digital and wider economy. For Intuety, consumer AI products present huge opportunities rather than risk. Tools and systems built on language models can advance, supplement and augment those built on knowledge models, and vice versa. Nothing is built in isolation, with everyone set to benefit from continued attention, research and investment in AI/ML technologies. A rising tide lifts all ships. You just need to be sure you aren’t tied to the bottom.

But what do you think? Are we doomed to servitude to our AI overlords? Are older products going to be wiped out thanks to AI? Are you starting a business off the back of ChatGPT?

Addendum

Between writing this article and publishing it, more major moves have been made in the AI space that are worth mentioning. Firstly, Microsoft announced that ChatGPT will be directly integrated into Bing. I expected my prediction of an AI chat-based search engine to come to pass. I didn’t expect it to be so quick. Given there continues to be backlash against AI “stealing” people’s work, I expect those arguments to expand from art to text-based content. Microsoft has said they are working on attribution and reporting sources. When quizzed further, they didn’t explain how. I doubt they know themselves. How do you make attribution to millions of articles?

In addition, Google announced Bard, its answer to ChatGPT and its subsequent integration into Bing. After a glaring error was shown during its demonstration (incorrectly attributing the first photo of a planet outside our solar system to the James Webb Space Telescope), Google (or should I say Alphabet) subsequently lost $100 billion from its market cap. This shows three things. Firstly, it proves the argument above: these language models lack knowledge. It knew “photo of planet” followed “telescope” somewhere in a paragraph. What it said was grammatically correct, but factually wrong. Secondly, it shows that people WILL take what AI says at face value. In this instance, the person putting together the demonstration video was either too rushed (trying to beat Microsoft’s announcement) or didn’t think to fact-check. Recent history has shown this will be an increasing trend, and I worry about AI outputs being influenced by third parties for commercial or political gain. Thirdly, the loss in Alphabet’s valuation shows a definite hype bubble around AI, akin to the “blockchain” hype of a few years ago. That the stock price is so sensitive to a single mistake, as opposed to when Google shuts entire products, is telling. It does, however, prove that the markets are seeing AI, ChatGPT and Bing as a definite threat to Google’s advertising revenue. The economy will change. I’m not worried about little old Google. But I think content creators will suffer.